The Translator (speaking in chitin tongues)

Video-based experiments (WIP): how would a plant visualise itself? How would it understand its body, metabolism, root to leaftip presence?

For description of full project see: HERE

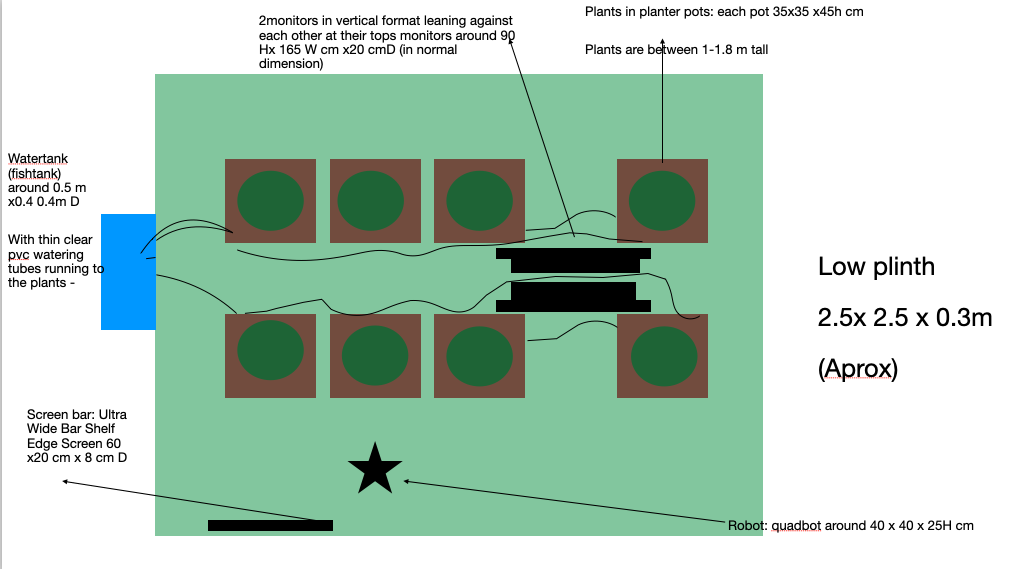

Mockup installation: The Translator: A stage for the narrative play between a robot and the greenhouse plants it is coded to care for.

Script in development:

[Part 1: OPENING. ROBOT MOVES OUT FROM RESTING POSITION BETWEEN 2 SCREENS. Starts speaking as walks.]

The local environmental and plant health analysis timecheck is commencing.

Spot energy price report is due in the next 7 minutes. Daily vegetable forward pricing due within 5 minutes. The next round of economic decisions will be activated at that point.

[Stops at A100, turns to look at plants and screen]

The soil substrate water level is currently at 5% below optimum for growth phase.

Shall we initiate water supplementation from your daily allotment- to start now? The costings have been allocated to produce returns.

[Looks up at plants. Tone change. The text font changes on the translation screen:]

Care is a program of vigilant monitoring

For the best economic outcomes.

My plants- I am here to care for you.

[Walks further forward. Turns to look at plants. Pauses then takes 1 step forward:]

I speak with you in insect sounds and you reply through diode screens.

You stand, ground tethered by root and stem, screen- dreaming in vegetal time

As I circle your dream-space in this insectoid form.

I am all of the infrastructure that surrounds you.

None of us are what we seem.

[Sways side to side]

I speak with you in chitin sounds

In tongues of insect wings and diaphragms

Of buzz and scrape;

of click and vibrate.

As you listen out for the hertz of beeswing beat

Of ladybird rub and leafhopper song,

Of cricket chirp and hoverfly hum.

[Steps back then pauses.]

The spot energy price report is due within 7 minutes. Daily vegetable forward pricing due within 8 minutes. The next round of economic decisions will be activated at that point.

My plants, greenhouse temperature is within range for your maximum growth. The predicted need for additional heating is low. Soil temperature at 18 degrees Celsius and within range. All plant metrics from previous analysis timecheck are aligned.

The soil substrate water level is currently at 5% below optimum for growth phase. Shall we initiate water at 40 ml/minute from your daily allotment- to start now? The costings have been allocated to produce returns.

Plant: video of water drop like crystalline growth

Spot energy price incoming – it is time to evaluate current ambient light against your predicted photosynthetic needs and your upcoming flowering.

Atmospherics analysis of leaf-level O2 against CO2 draw-down rate indicates you are below optimal photosynthesis activity to meet our production curve

Shall we increase the full spectrum light duration to help you?

Ever since your ancestor first reached out

To embrace a cyanobacterium

You have magically absorbed light

And created carbonaceous form.

[Turns to look at screen. Raises and lowers body]

I see you comprehend and agree. Thank you.

Extending the lighting for an additional 76 minutes per day will enable you to reach maximum production 9 days faster.

[Turns and starts walking then pauses- ]

The increased electricity cost for the additional light equates to 0.07% of forward return value.

All above-contract energy decisions in this production cycle are impacted by rising geopolitical tensions in the region.

Non-renewable electricity from tundra shale oil is the only available source within the allowable price range-

My princesses, the additional costs have been allocated to produce returns.

The lithified sunlight from microbial arctic oceans 250 million years ago

Now here to you, for your enhanced photosynthetic needs.

[Bows]

I am watching over you, my vegetal charges. I see you are content with this decision. Thank you.

[Steps forward 1 or 2 steps

Sways L and R with each phrase:]

I am the worker bee to your bearing queen.

The soldier wasp to your fertile Reine.

My princesses, a sensor alert has just indicated a subtle shift in your volatile emissions- your methyl jasmonate levels are higher than baseline.

(Your exhaled words, in carbon rings, hover on the tips of your stomata)

You breathe in with light and out with dark.

Your thousand stoma-mouths open and close

to taste the air of your world

and the words of your kin.

You speak with me in volatiles,

Your exhaled words in carbon rings,

that slip from your stomata as you transpire.

Breaths of chemistry glide to gaseous words

[I speak with you in chitin sounds

In tongues of insect wings and diaphragms]

[Turns to look to point A then pauses]

Weekly forward pricing for vegetable produce has just been received.

Forward trends indicate a downward movement for your predicted harvest period. It is not good news, vegetal ones.

Transnational human consumption patterns are down this quarter- possibly aligned with social cost of living increase and geopolitics of tariffs from global customers. The window for positive returns on inputs and costs is being recalculated immediately.

(Fruit of your body! Your terpene-rich and fertile breath!)

This unanticipated turn means that the predicted margins on harvest profits are reducing. My princesses, we need to consider this further you and I.

(All care is costed)

Vegetal princesses-

The future ghosts your porcelain flowers

Where the swelling fruit of your body

seeds our immediate and uncertain futures

Realtime updates on human labour cost estimates for harvesting have been received.

Vegetal princesses, this too is not good news.

Immigration policy changes have placed limits on human movement for all harvest operations-

increasing hourly harvest costs.

Labour-displacement automated picking is not yet adapted for the fragile fruit of your bodies. So you must rely on humans yet still.

[Turns to look at plants at end of row]

Forward contracts will be issued at the higher price so we can guarantee the finger pads of human hands to harvest your fruit. This increased price will be costed to harvest returns.

(Our futures ghost your porcelain flowers)

The balance of payment for care is drawing near- bring forth your fruit. The harvest season is approaching.

[Turns to look at screens. Raises body:]

You are listening- thank you.

(I insect-crouch at your branching feet)

You dream to me with the bounty of fertile flowers and their promise of fruiting.

(With a scent landscape from your breathy words)

[Lowers body turns right and walks to A50 speaking as moves:]

Atmospheric metrics have returned to normal and CO2 will remain at elevated levels until fruiting is complete.

Volatile dysregulation has resolved with no evidence of biotic stress. All is clear for your bounty to form.

[Turns to look at plants]

(I insect-crouch at your branching feet.

You view life in a long slow blink and blink again.)

Vegetal ones, the time to make decisions is approaching. We must talk about your corpus and your future. All care is counted.

(You stretch your presence beyond

From seed to plant to seed again)

[Turns and walks to A100 speaking as moves]

Labour and energy costs are continuing to rise. The equation of care has shifted.

(All care is counted. All care is costed)

Calculated returns from harvested produce allow for 35 days of power and 36 days of care.

I see your dreams are shifting. Please listen closely and dream attentively.

(You breathe in with light and out with dark.

Your thousand stoma-mouths open and close)

My Queens, the resource management system does not recommend continuing this crop beyond the current harvest. We cannot continue at this level of care beyond 35.5 days.

(You have already known this destiny-

It feels its way into your lignin limbs and swelling fruit)

[Moves back a step]

At the end of this harvest care will be withdrawn and you must die.

We will harvest your seed! We will honour your flowers!

I insect-crouch at your branching feet

You stretch your presence beyond

From seed to plant to seed again

You view life in a long slow blink and blink again

We will harvest your seed!

We will honour your flowers!

[Plant: video of roots in soil]

[Moves forward so head touches pots]

You have already known this destiny in your lignin limbs and swelling fruit

Your stomata will sigh:

it is only humans that fear death and darkness

[Pause]

Ghosts of your flowers, your planet-wide view is of life's entire cycle.

It is only humans that fear death and darkness.

ROBOT RETURNS TO INITIAL POSITION AND GOES TO SLEEP

-END Around 10 mins-

From root to stem: experiments in TouchDesigner. Base model/instance to build in electrophysiological datastream from the plants and soil metrics.

With Felipe

Concatenator trial: for the robot’s voice:

Sounds of 50 insects found on vegetable plants and/or in greenhouses. AI-matched to phoneme pitch and frequency of spoken word. Screen video of program decision-making.

Working with:

Nathan Marcus; digital renderings, worldbuilding

Felipe Rebolledo; digital designer, TouchDesigner

Charl Linsen, computational neuroscientist: Robotics programming, choreographic translations